
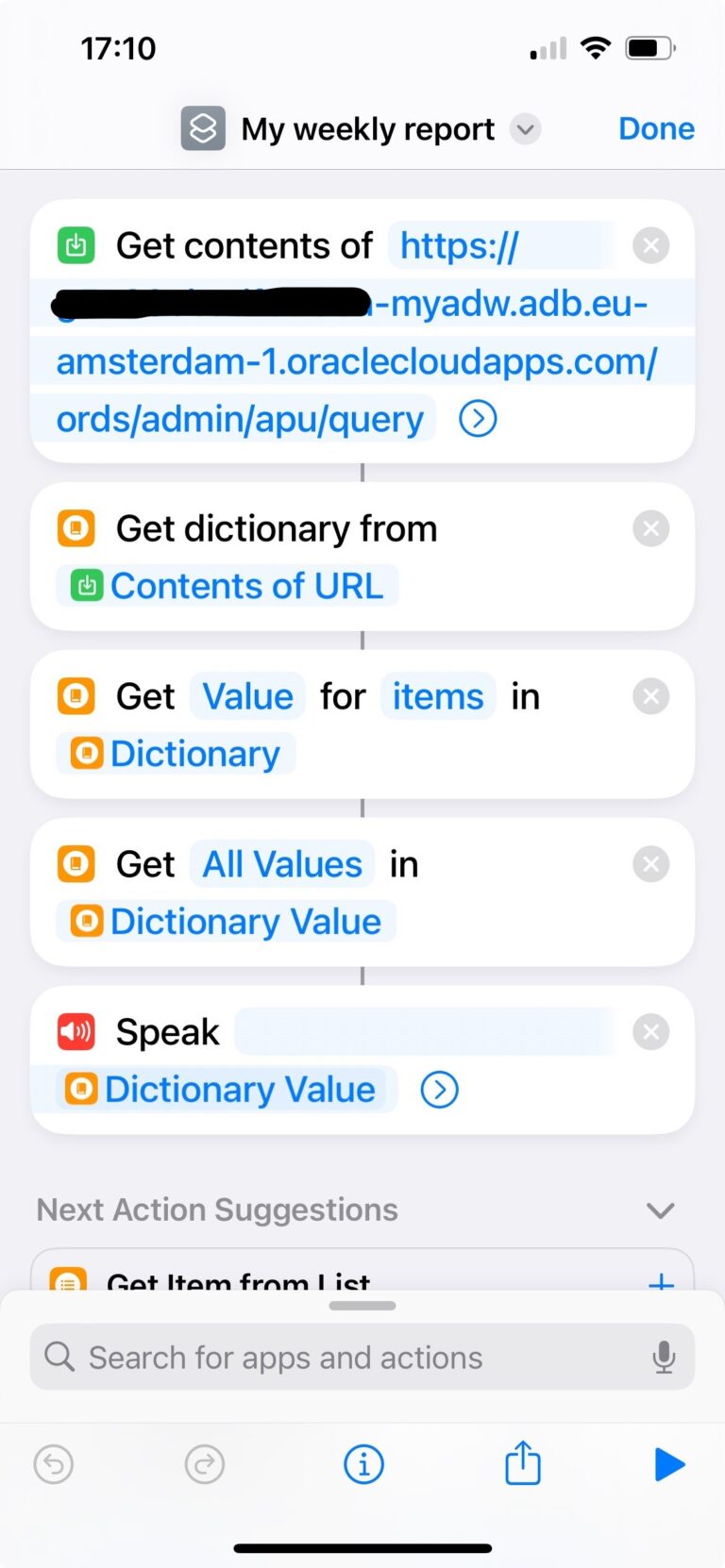

Using my Apple Watch to chat with my Autonomous Database

by Javier

2 comments

How do you build this one from end to end?

Hi Kris,

There are only two things that are not explained in the blog. The first thing you need is an Autonomous Database with some data in it. Then you need to configure Select AI with your favorite LLM. It is just a few SQL commands, and this blog post walks through them: https://blogs.oracle.com/machinelearning/post/introducing-natural-language-to-sql-generation-on-autonomous-database

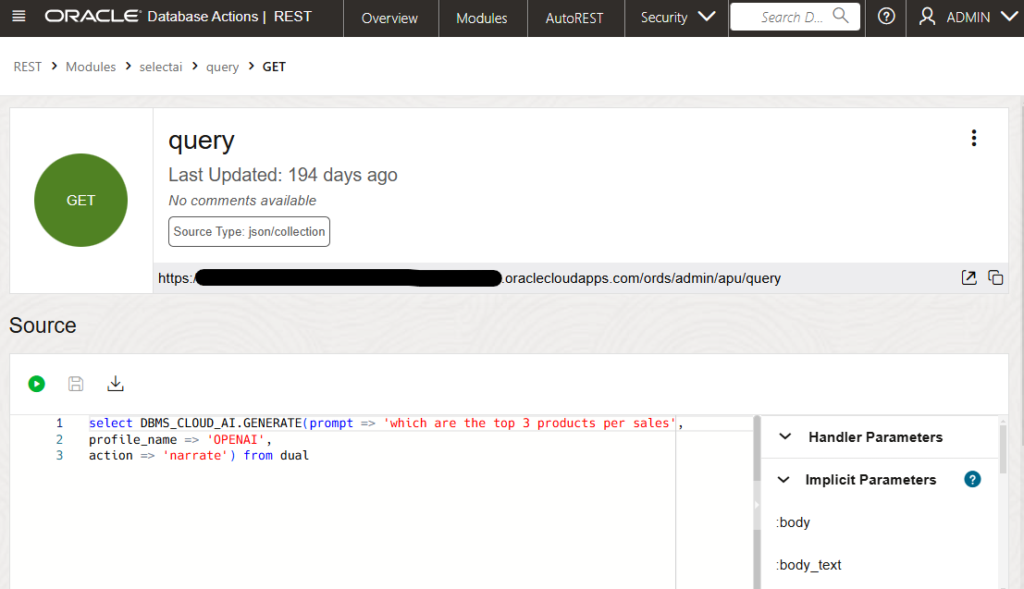

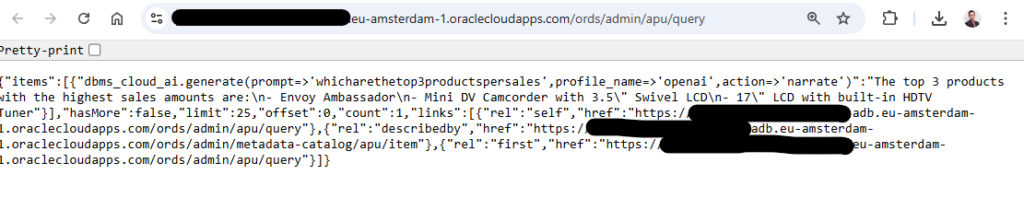

At that point you should be able to use Select AI from SQL Developer Web. The second thing is to expose it as a REST endpoint. We have a page of free workshops, including one on REST for Autonomous Database, here: https://apexapps.oracle.com/pls/apex/r/dbpm/livelabs/view-workshop?wid=815&clear=RR,180&session=11582783738142

Follow Step 5 of the LiveLab and just add the code you see in the blog.
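
Once the endpoint is up, it can help to check it from a laptop before wiring up the watch. Here is a minimal Python sketch of such a smoke test. It is not the code from the blog: the URL is a placeholder, and the `prompt` query parameter and the use of a GET request are assumptions, so adjust them to match how your own handler is defined.

```python
import urllib.parse
import urllib.request

# Hypothetical endpoint: replace with the URL of your own REST module.
BASE_URL = "https://example.com/ords/admin/chat/ask"


def build_url(base_url: str, question: str, param: str = "prompt") -> str:
    """Return base_url with the question URL-encoded as a query parameter.

    The parameter name "prompt" is an assumption, not something ORDS mandates.
    """
    query = urllib.parse.urlencode({param: question})
    return f"{base_url}?{query}"


def ask(base_url: str, question: str, timeout: float = 30.0) -> str:
    """Send a GET request to the endpoint and return the response body as text."""
    url = build_url(base_url, question)
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


# Example call (needs a live endpoint, so it is left commented out):
# print(ask(BASE_URL, "How many customers do we have?"))
```

If the call returns the same kind of answer you get from Select AI in SQL Developer Web, the REST layer is working and any remaining issue is on the watch side.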